Have you tried setting the clock manually for each 3060 Ti by pressing the “Run command” button?


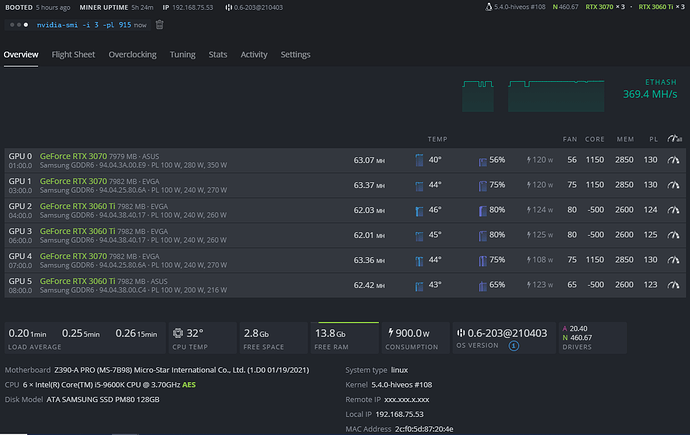

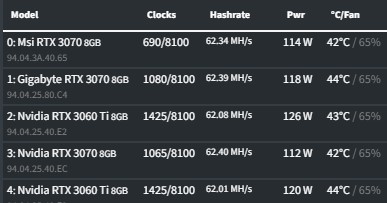

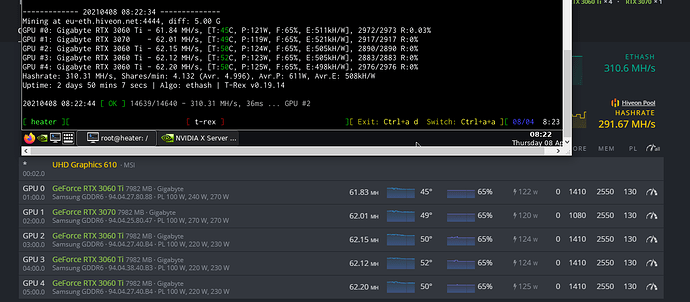

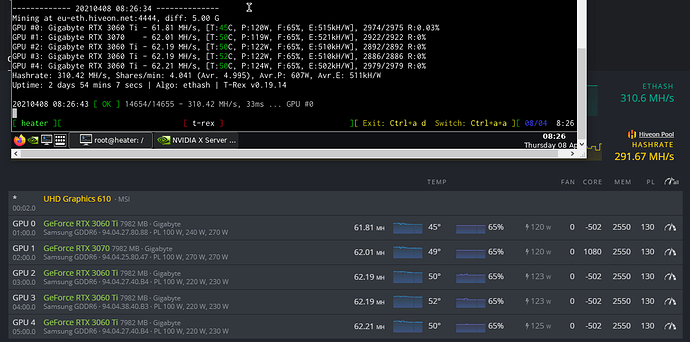

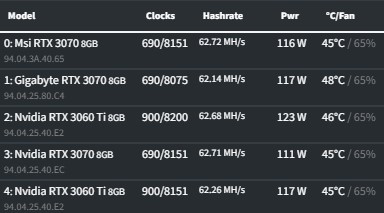

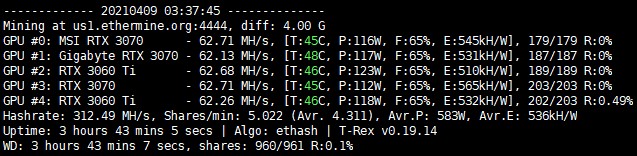

I set the core clock to 915 MHz on each of them with `nvidia-smi -i 0 -lgc 915`, where the number after `-i` is the GPU index. I set the power limit to 126 W. I get exactly the same hashrate as the 3070.
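If you have several cards, typing one command per GPU gets tedious. Below is a minimal sketch that builds the same per-GPU commands in a loop. It assumes you run it on the rig itself with root rights. It also assumes the power limit is applied with `nvidia-smi -pl` (the post doesn't say how the 126 W limit was set, so that part is an assumption). `GPU_COUNT` is a hypothetical placeholder; set it to the number of cards in your rig. By default it only prints the commands (dry run).

```python
import subprocess
from typing import List

# Clock and power values from the post; GPU_COUNT is a hypothetical placeholder.
CORE_CLOCK_MHZ = 915
POWER_LIMIT_W = 126
GPU_COUNT = 6


def build_commands(gpu_count: int, clock_mhz: int, power_w: int) -> List[List[str]]:
    """Return a lock-clock command and a power-limit command for each GPU index."""
    commands = []
    for idx in range(gpu_count):
        # -i selects the GPU by index, -lgc locks the graphics clock (MHz)
        commands.append(["nvidia-smi", "-i", str(idx), "-lgc", str(clock_mhz)])
        # -pl sets the power limit in watts (assumption: this is how PL was set)
        commands.append(["nvidia-smi", "-i", str(idx), "-pl", str(power_w)])
    return commands


def apply(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply(build_commands(GPU_COUNT, CORE_CLOCK_MHZ, POWER_LIMIT_W))
```

Once the printed commands look right, call `apply(..., dry_run=False)` to actually run them.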